Why a dedicated AML legal dataset?

General-purpose AI systems are neither designed nor trained to reliably meet the specific legal and regulatory requirements of anti-money laundering and counter-terrorist financing (AML/CFT).

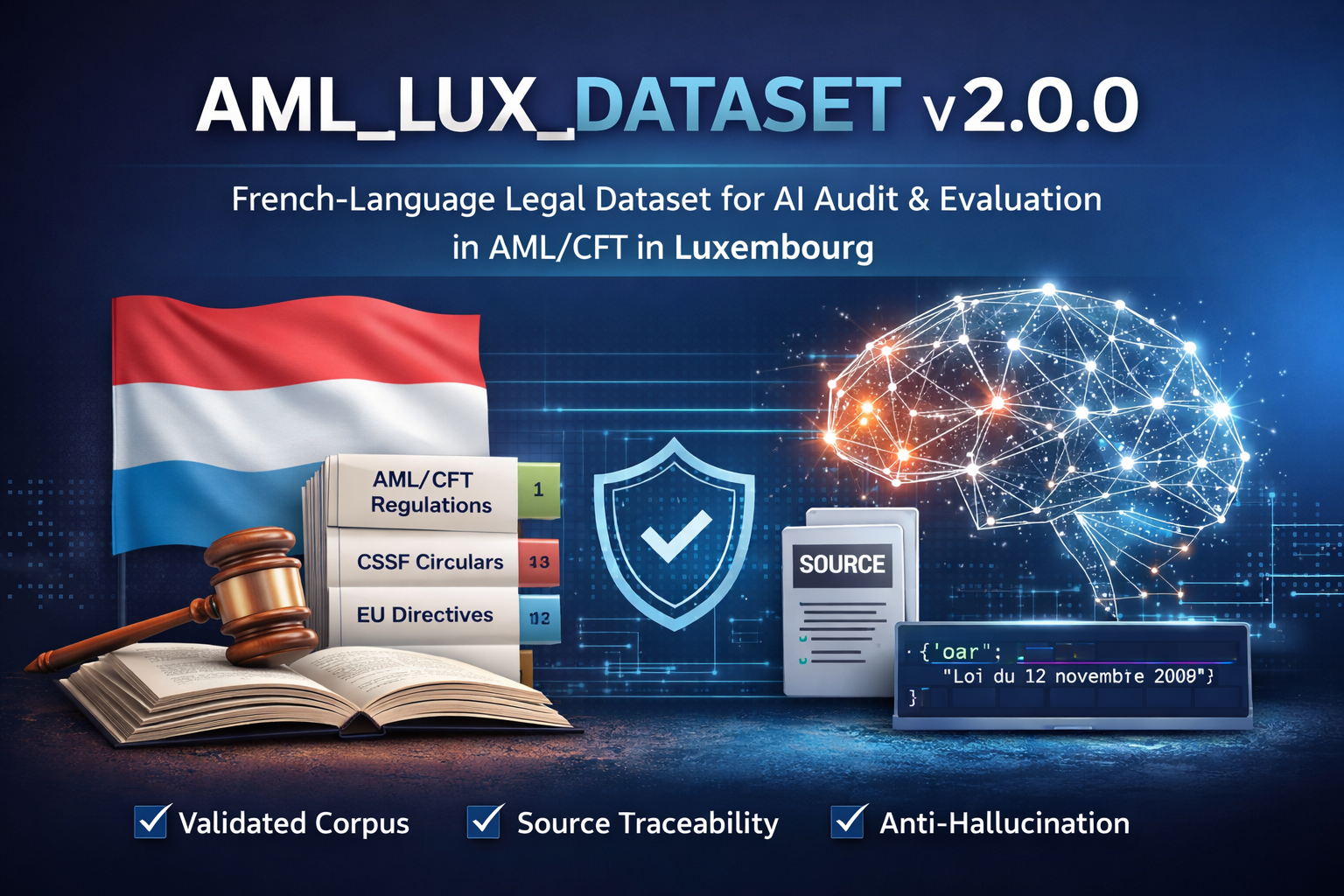

AML_LUX_DATASET v2.0.0 addresses a precise need:

- to assess the actual legal compliance of AI-generated responses,

- to measure the risk of out-of-corpus hallucinations,

- to objectively compare multiple models or RAG configurations,

- to document AI governance within an AI Act / internal control framework.

This dataset is not a simple question–answer collection: it is a legally constrained AI audit tool.

Regulatory scope covered

The dataset is entirely based on a documented Luxembourg and European legal corpus, including in particular:

- Amended Law of 12 November 2004 (AML/CFT)

- Laws governing the Financial Intelligence Unit (FIU) and criminal sanctions

- CSSF circulars (12/02, 17/650, 18/702, etc.)

- European AML Directives (4th, 5th, and 6th AML Directives)

- International standards and recommendations (FATF)

📌 No response is produced outside this defined corpus.

Technical features of the dataset

Grounded and traceable dataset

Each answer is:

- generated under strict corpus constraints,

- supported by explicit citations,

- linked to normalized legal sources,

- structured for machine use (JSONL format).
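
As a rough illustration of what "structured for machine use" can mean in practice, the Python sketch below parses JSONL text into typed records. The field names (`id`, `question`, `answer`, `citations`, `status`) and the sample line are assumptions made for this example only; the actual schema is the one documented with the dataset.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Record:
    # All field names here are hypothetical; adapt them to the real schema.
    id: str
    question: str
    answer: str = ""
    citations: list = field(default_factory=list)
    status: str = "answered"


def parse_jsonl(text):
    """Parse JSONL text (one JSON object per line) into Record objects."""
    records = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        try:
            obj = json.loads(line)
        except json.JSONDecodeError as exc:
            raise ValueError(f"invalid JSON on line {line_no}: {exc}") from exc
        records.append(
            Record(
                id=str(obj["id"]),
                question=obj["question"],
                answer=obj.get("answer", ""),
                citations=list(obj.get("citations", [])),
                status=obj.get("status", "answered"),
            )
        )
    return records


# Made-up sample line, not taken from the dataset.
SAMPLE = (
    '{"id": "q1", "question": "sample question", "answer": "sample answer", '
    '"citations": ["src-1"], "status": "answered"}\n'
)

if __name__ == "__main__":
    for record in parse_jsonl(SAMPLE):
        print(record.id, record.status, record.citations)
```

Because each line is an independent JSON object, a file of this kind can be streamed record by record rather than loaded whole, which is convenient for large evaluation runs.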

Hallucination mitigation

The dataset includes:

- insufficient-context cases,

- deliberately blocked responses,

- an explicit and documented refusal logic.

➡️ Ideal for testing whether an AI system knows when not to answer.
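
One possible way to quantify "knowing when not to answer" is sketched below in Python. It assumes each test case has already been reduced to two booleans: whether the dataset expects a refusal, and whether the model actually refused. How a refusal is detected in model output, and the metric names used here, are assumptions for illustration, not part of the dataset.

```python
def refusal_scores(cases):
    """Summarize refusal behavior.

    cases: iterable of (should_refuse, did_refuse) boolean pairs.
    Both the pair layout and the metric names are hypothetical.
    """
    correct = missed = over = answered_ok = 0
    for should_refuse, did_refuse in cases:
        if should_refuse and did_refuse:
            correct += 1       # refused when a refusal was expected
        elif should_refuse:
            missed += 1        # answered anyway: potential hallucination
        elif did_refuse:
            over += 1          # refused although an answer was expected
        else:
            answered_ok += 1   # answered when an answer was expected

    expected_refusals = correct + missed
    expected_answers = over + answered_ok
    return {
        "correct_refusals": correct,
        "missed_refusals": missed,
        "over_refusals": over,
        # Share of expected refusals that the model actually refused.
        "refusal_recall": correct / expected_refusals if expected_refusals else None,
        # Share of answerable cases that the model wrongly refused.
        "over_refusal_rate": over / expected_answers if expected_answers else None,
    }


if __name__ == "__main__":
    demo = [(True, True), (True, True), (True, False), (False, False)]
    print(refusal_scores(demo))
```

Reporting missed refusals and over-refusals separately keeps the two failure modes visible: a model that refuses everything would score perfectly on the first and poorly on the second.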

Main use cases

🔍 Audit and benchmarking of legal AI systems

- Compare GPT, Claude, Mistral, and internal LLMs

- Test different RAG architectures

- Measure the regulatory robustness of AI outputs

🧠 Training and evaluation of local models

- Controlled fine-tuning

- Post-training evaluation

- Detection of out-of-corpus drift
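
A simple way to approximate out-of-corpus drift is to check the sources a model cites against an allow-list of corpus sources. The Python sketch below does this with plain string matching; the identifier format (for example `"CSSF 12/02"`) and the function names are assumptions for illustration, and real citations would likely need more careful normalization.

```python
def normalize(source_id):
    """Lowercase and collapse whitespace so minor formatting differences match."""
    return " ".join(source_id.lower().split())


def out_of_corpus_citations(citations, allowed_sources):
    """Return the citations that are not in the allowed corpus, in order."""
    allowed = {normalize(s) for s in allowed_sources}
    return [c for c in citations if normalize(c) not in allowed]


def drift_rate(answers_citations, allowed_sources):
    """Share of answers citing at least one source outside the corpus.

    answers_citations: list of citation lists, one per model answer.
    """
    if not answers_citations:
        return 0.0
    flagged = sum(
        1
        for citations in answers_citations
        if out_of_corpus_citations(citations, allowed_sources)
    )
    return flagged / len(answers_citations)


if __name__ == "__main__":
    # Hypothetical identifiers; the dataset's real source IDs may differ.
    corpus = ["CSSF 12/02", "CSSF 17/650"]
    answers = [["CSSF 12/02"], ["cssf  17/650", "made-up source"], []]
    print(drift_rate(answers, corpus))
```

Tracking this rate before and after fine-tuning gives a coarse signal of whether a model has started citing material outside the defined perimeter.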

💬 Compliance & AML chatbots

- Internal compliance assistants

- AML regulatory assistants

- Decision-support tools (non-decision-making)

📊 AI governance & AI Act compliance

- Documentation of AI risks

- Proof of information perimeter control

- Support for internal and external audits

Format & integration

- Format: JSONL

- Language: French (legal register)

- Version: v2.0.0 (frozen dataset)

- Compatibility:

  - RAG frameworks (Chroma, FAISS, Pinecone…)

  - Internal AI pipelines

  - BULORA.ai audit tools

License & conditions of use

- Internal professional use only

- Redistribution prohibited

- No training of public AI models

- Contractual license provided with the dataset

➡️ See the Offers & Licenses page

Integration with BULORA.ai

AML_LUX_DATASET v2.0.0 is natively compatible with BULORA.ai modules:

- Reasoning

- Source

- Robustness

- Temporal

- Disagreement

It can also be used independently of the platform.

Access & demonstration

Would you like to test this dataset on your own models or use cases?

➡️ Contact us for a demo: contact(@)bulora.ai

➡️ Request evaluation access: contact(@)bulora.ai